The AI Price Hike

Companies are bundling AI and raising software prices because they don’t have a choice.

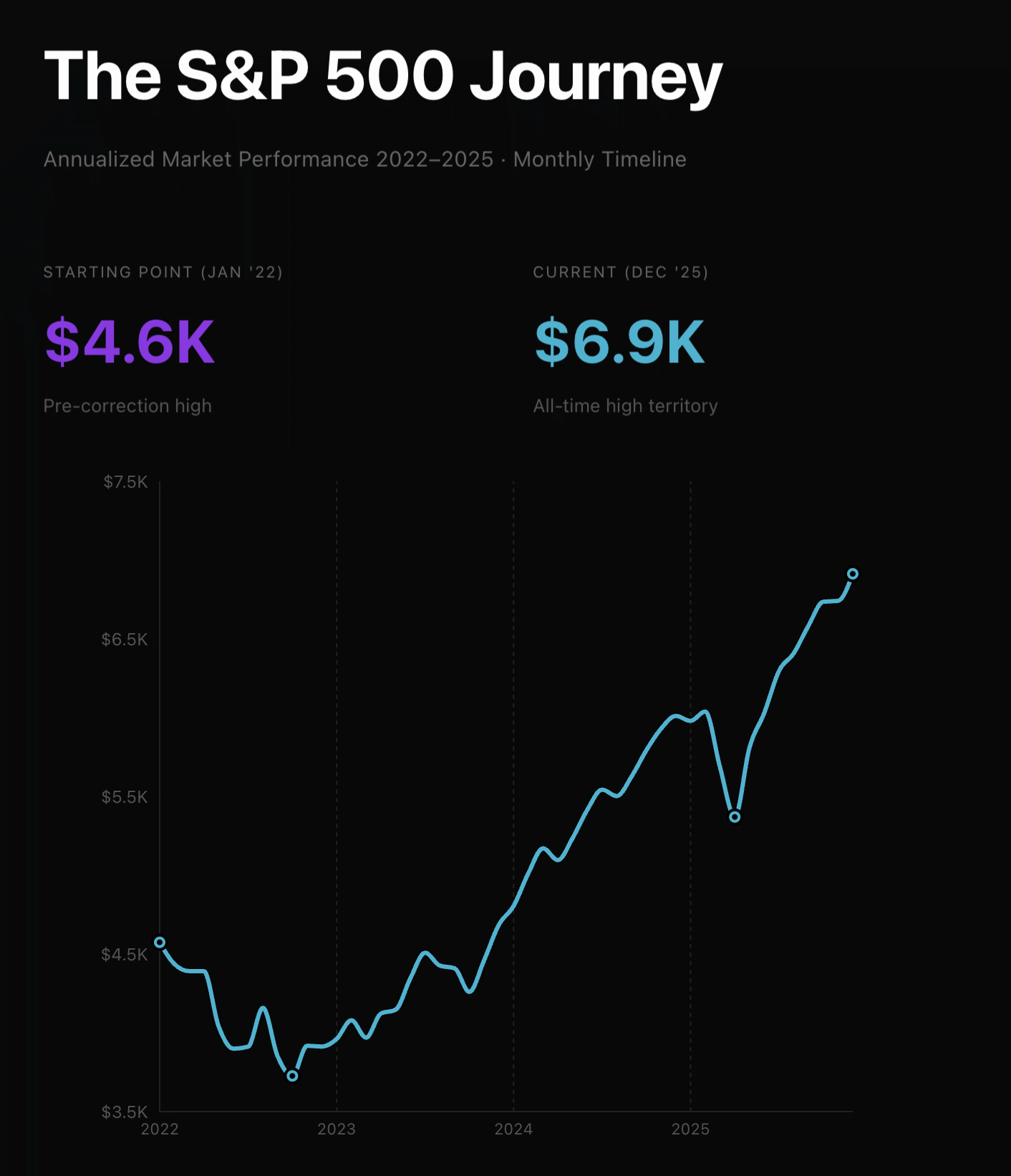

We should be in a recession. Interest rates jumped after COVID, the war and tariffs are disrupting global trade, and Western countries are struggling with expensive energy, social spending, and competition from China. Yet the economy is growing and markets keep breaking records. The reason is AI: it accounts for roughly 85% of US equity gains this year, and about half of S&P 500 stocks are associated with AI.

Everyone comparing it to the dotcom bubble either doesn’t know what happened in 2000 or misunderstands it. Even though the internet was still in its infancy, people somehow believed it would change everything within a year or two. Investors poured millions into any company with the word “internet” in its boilerplate, despite little to no revenue, relying on user numbers alone. And by users I literally mean “signups”, because nobody tracked Daily or Monthly Active Users back then.

Most of Mark Cuban’s fortune came from his dotcom project Audionet (later renamed Broadcast.com), a streaming platform for audio and video that he sold to Yahoo! for $5.7Bn in 1999, just before the party ended. And its revenue? $13.5 million in the last quarter before the sale.

This is a typical S-curve of expectations: investors overestimated the short-term effects but underestimated the long-term ones. The internet did change the world, and tech companies dethroned oil corporations as the world’s most valuable businesses. Pets.com launched in 1998 to deliver pet food and toys and shut down two years later, but the model was sound: you can easily order those things online today. They were simply too early and tried to create demand that wasn’t there yet.

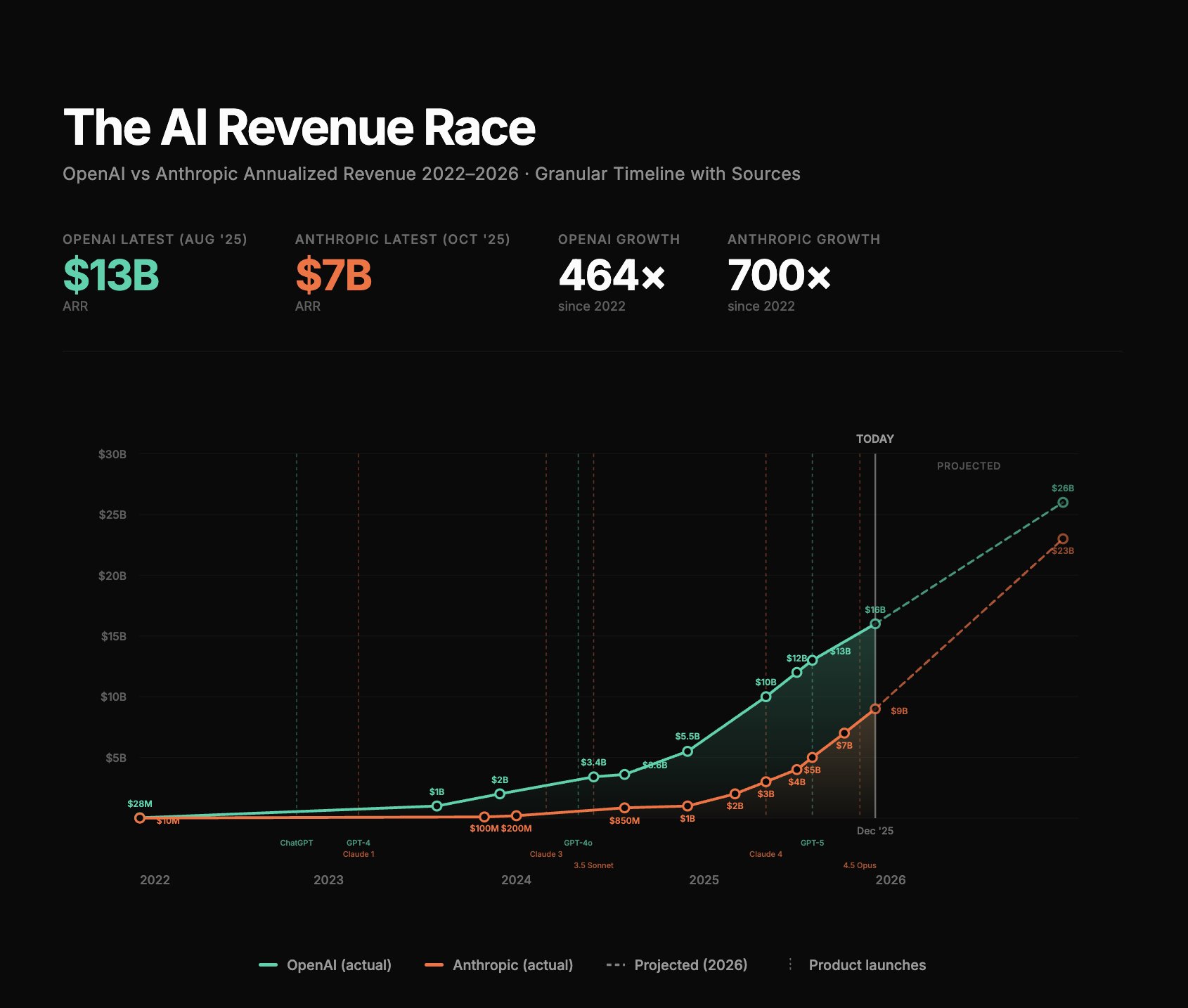

And unlike the dotcom companies, AI labs are printing money. OpenAI and Anthropic collectively generate $25 billion in revenue. These are the fastest-growing companies in history.

People argue about whether Nvidia is going to bust, but its $4.4T market cap looks far more reasonable once you look at its earnings: it simply makes a lot of money. Nvidia trades at a P/E of around 45, which is within the norm for its industry and growth rate.
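A quick back-of-the-envelope check uses only the two figures above, and assumes the P/E refers to trailing annual earnings. Dividing the market cap by the P/E gives the profit the market is pricing in:

```latex
\text{Implied annual earnings} = \frac{\text{Market cap}}{\text{P/E}}
  \approx \frac{\$4.4\ \text{trillion}}{45} \approx \$98\ \text{billion}
```

A company earning close to $100 billion a year is a very different animal from a dotcom startup valued on signups.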

These companies benefit from AI directly, by selling inference or the hardware that runs it. If we take Altman at his word, OpenAI is profitable on inference and only loses money on training. But what about everyone else, the companies that have to pay for these APIs, margins included?

Look around and AI seems to have become the biggest differentiator for tech products, and yet all of them have it. Task managers and note-takers that existed for years have suddenly become “AI-first”, at least according to their landing pages. Many apps and platforms have pivoted their entire businesses because the new opportunities are far bigger.

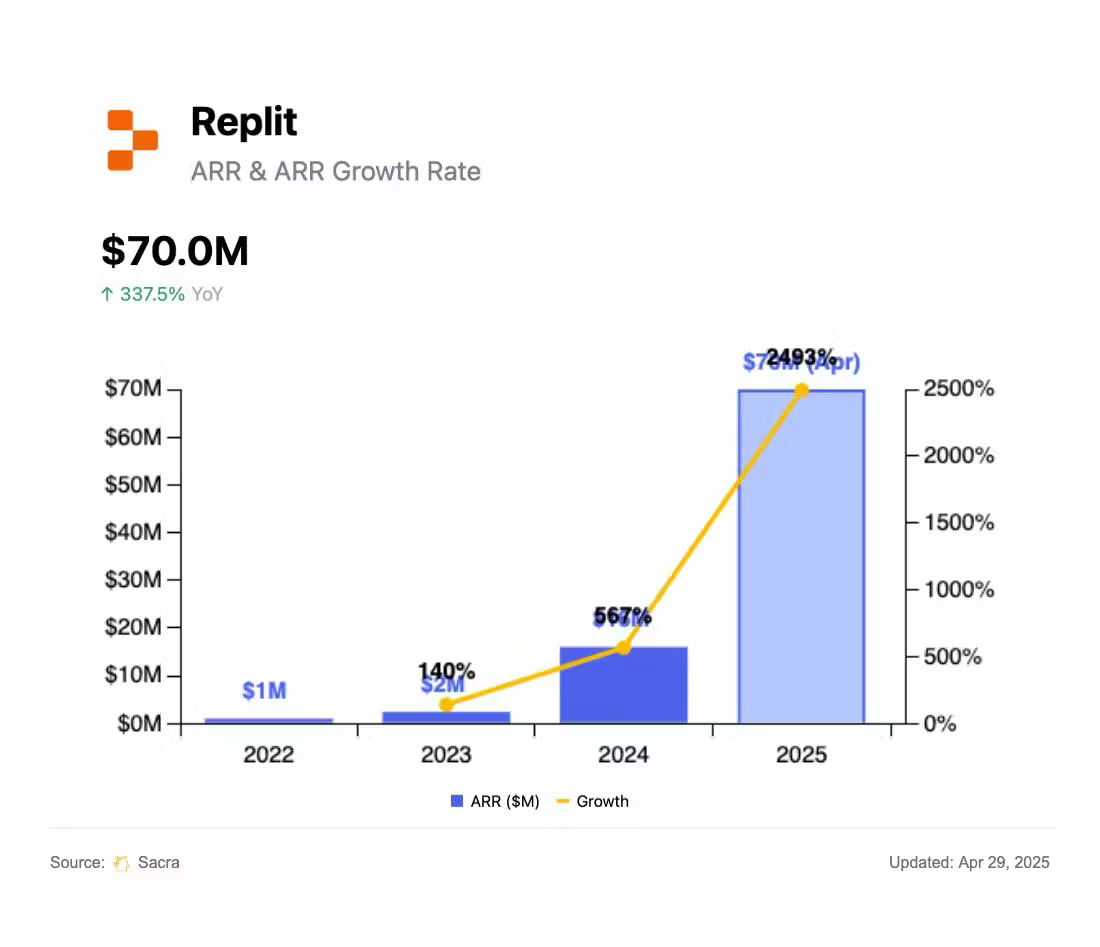

Replit started in 2016 as a web-first IDE available in the cloud, primarily targeting students. Last year it had slightly over $2M in ARR. This year, Replit shifted from a coding IDE to an AI-first agentic platform for building apps and suddenly grew to $150M in ARR. It is now projecting $1 billion in revenue by the end of 2026. The demand for AI coding is so massive that even minor players get a lot of interest.

Cursor, an agentic IDE based on VS Code, launched in 2023. This November it raised a funding round valuing it at $30Bn, probably about three times what the whole of JetBrains is worth, on the back of Cursor’s enormous revenue growth. Developers just love Cursor and can’t get enough of it.

But these are apps for developers. Software development is the most obvious use case for LLMs besides general knowledge retrieval, and a far more lucrative one. Developers are already expensive, so anything that makes them more efficient offers real leverage, and businesses are more willing to pay for it. On top of that, programming languages have something that natural ones like English don’t: output that can be checked automatically. Code either compiles and passes its tests or it doesn’t, which makes it easier to iterate and to improve LLMs’ ability to produce it.
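To show why checkable output matters, here is a minimal sketch of the generate-and-check idea. It assumes a model has already produced several attempts at the same small task; the three `candidate_*` functions below are hypothetical hand-written stand-ins for those attempts, and `TESTS`, `passes` and `first_working` are illustrative names, not any real tool’s API. A handful of test cases is enough to accept or reject each attempt with no human judgement involved. Real coding agents are far more elaborate.

```python
from typing import Callable, Optional

# Hypothetical stand-ins for LLM-generated attempts at one task:
# "return the sum of the even numbers in a list".
def candidate_a(xs: list[int]) -> int:
    return sum(xs)  # wrong: ignores the "even" requirement

def candidate_b(xs: list[int]) -> int:
    return sum(x for x in xs if x % 2 == 1)  # wrong: sums the odd numbers

def candidate_c(xs: list[int]) -> int:
    return sum(x for x in xs if x % 2 == 0)  # correct

# Test cases act as the automatic verifier: (input, expected output).
TESTS: list[tuple[list[int], int]] = [
    ([], 0),
    ([1, 2, 3, 4], 6),
    ([-2, 5, 8], 6),
]

def passes(fn: Callable[[list[int]], int]) -> bool:
    """Return True only if the candidate gives the expected output for every test."""
    try:
        return all(fn(inp) == expected for inp, expected in TESTS)
    except Exception:
        return False  # a crash counts as a failure

def first_working(
    candidates: list[Callable[[list[int]], int]],
) -> Optional[Callable[[list[int]], int]]:
    """Try candidates in order and return the first one that passes all tests."""
    for fn in candidates:
        if passes(fn):
            return fn
    return None

if __name__ == "__main__":
    winner = first_working([candidate_a, candidate_b, candidate_c])
    print(winner.__name__ if winner else "no candidate passed")
```

There is no equivalent for an essay or a marketing email: no test suite can tell you the second draft is “correct”, which is part of why coding is where LLMs improve fastest.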

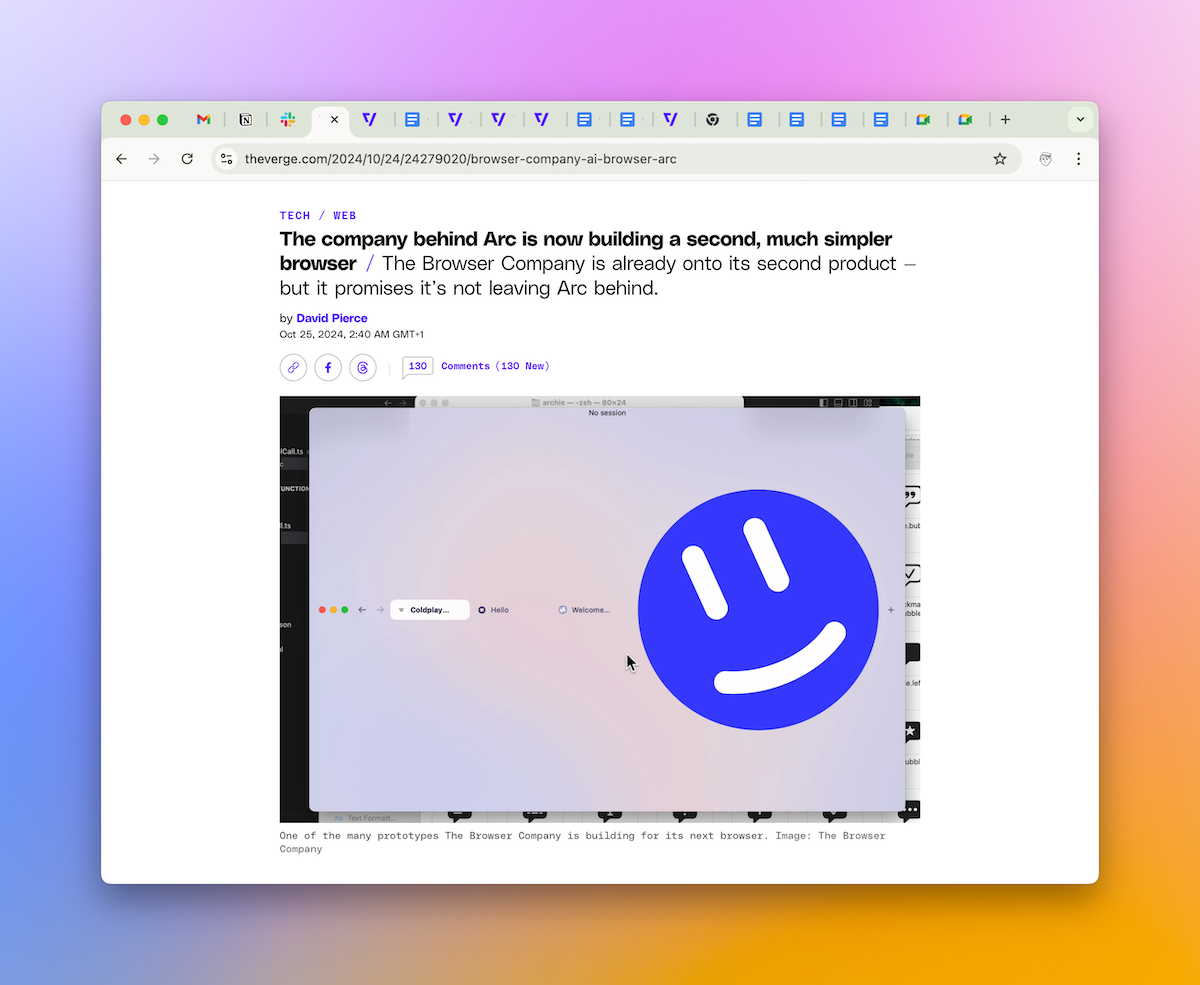

What about everyone else? There’s no shortage of examples, since every app is integrating AI now. Google pushes Gemini in Gmail and Docs. Notion has Notion AI everywhere in the app. We recently got AI in Slack: it tries to handle minor tasks and summarises the threads I missed while I was away. Grammarly acquired Superhuman and relaunched as an AI productivity suite under the Superhuman brand.

For many companies, AI has been a great excuse to raise prices. Our Notion price per user has gone up by 25%. Google Workspace raised its price by about $2 for everyone because it bundled Gemini. Do we use Notion AI? No, but we can’t avoid paying for it, and the same goes for Google Workspace. I suppose the silver lining is that if you actually use Gemini a lot, all the other business users are basically subsidising you at the moment. That’s how it works: prices go up across the board to cover inference costs, but if most users barely touch AI, the company’s margins go up too.
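To make the cross-subsidy concrete, here is a toy model. Every number in it is a hypothetical assumption (the $2 bump, the per-seat inference costs and the usage split), not Google’s or Notion’s actual figures. A flat price increase paid by every seat covers the inference of a few heavy users, and whatever the light users don’t consume becomes margin.

```python
from dataclasses import dataclass

# All numbers below are hypothetical, chosen only to illustrate the mechanism.
PRICE_INCREASE = 2.00  # flat monthly price bump per seat, USD

@dataclass
class Segment:
    name: str
    seats: int
    inference_cost_per_seat: float  # monthly AI inference cost per seat, USD

def net_per_seat(seg: Segment, price_increase: float) -> float:
    """Extra revenue minus inference cost for one seat; negative means subsidised."""
    return price_increase - seg.inference_cost_per_seat

def extra_margin(segments: list[Segment], price_increase: float) -> float:
    """Total extra revenue minus total inference cost across all seats."""
    return sum(seg.seats * net_per_seat(seg, price_increase) for seg in segments)

segments = [
    Segment("heavy AI users", seats=100, inference_cost_per_seat=8.00),
    Segment("light AI users", seats=300, inference_cost_per_seat=0.50),
    Segment("non-users", seats=600, inference_cost_per_seat=0.00),
]

if __name__ == "__main__":
    for seg in segments:
        print(f"{seg.name}: {net_per_seat(seg, PRICE_INCREASE):+.2f} per seat")
    print(f"extra margin: {extra_margin(segments, PRICE_INCREASE):.2f}")
```

With these made-up inputs each heavy user costs $6 a month more than they pay, yet the company still ends up $1,050 a month ahead, because most seats barely touch AI. If most seats became heavy users, the same $2 bump would no longer cover the cost.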

But we need to distinguish between using AI to solve clear pain points and bolting it on top of an existing product in the hope it will take off. Adding AI just because you can is cargo-culting. Raycast, an indispensable Mac app, tried bundling AI into its subscription in the hope of justifying the price. Eventually it gave up and let anyone bring their own API keys.

People don’t want to pay ten times over just to chat with a slightly different LLM in every app. For that, they already have ChatGPT, which is OpenAI’s ultimate moat and reason to exist. Beyond that, the real value of LLMs comes not from chatbots but from the small, specific features they enable.

That’s how you get a market where software got expensive across the board, not because AI created value, but because companies used AI as cover to raise prices while their competitors were doing the same thing.

I talked to several operators at some of the largest tech scale-ups. All of them are frightened. The common belief is that you have to integrate AI, if only because all your competitors are doing so. It’s the Red Queen hypothesis: “it takes all the running you can do, to keep in the same place.” And they are not crazy: just look at Cursor again and all the competitors it was able to overtake solely because of AI.

Companies that have spent years building their products expect to have a moat, at least in terms of features and capabilities. It turns out AI can make most of that functionality unnecessary, and even if it isn’t great yet, OpenAI, Google and Anthropic are burning billions on training the next state-of-the-art model anyway. The models will only get better.